Instant GitHub Codespaces are dangerous without realistic data

Picture the scene:

- You click a button on a GitHub repository

- A new tab launches with VS Code containing your code

- All of the prerequisites are preinstalled and you can start coding, compiling, and running your application instantly

Amazing! Forget about walking line-by-line through “SETUP.md” to configure your local machine.

But let's fast-forward a little bit more…

- You start your web app and load it in a new tab

- You log in to your web app

- You’re greeted with an empty screen

There’s no data. There are no other users. There’s no realistic data in this application at all.

Regardless, you tentatively walk through the application, generating data on the “happy path”. You finish your changes, submit your PR, merge it, and deploy it to production.

Then your alerting system lights up bright red and users start reporting errors. All because some data in production breaks the assumptions you made in dev with your empty dataset.

Development in a bubble of perfect (or zero) data provides a false sense of security. Production will never look like this, so you must test your code in an environment that resembles production.
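To make that failure mode concrete, here is a minimal sketch in Python using an in-memory SQLite database. The `users` table, the `display_names` function, and the sample rows are all hypothetical, invented purely for illustration. The code works against the data you would create by hand on the happy path and fails as soon as a production-like row with a missing value appears.

```python
import sqlite3


def make_db(rows):
    """Build a throwaway in-memory database holding the given (first, last) rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT)"
    )
    conn.executemany(
        "INSERT INTO users (first_name, last_name) VALUES (?, ?)", rows
    )
    return conn


def display_names(conn):
    """Format every user as 'First LAST'. Silently assumes last_name is never NULL."""
    rows = conn.execute(
        "SELECT first_name, last_name FROM users ORDER BY id"
    ).fetchall()
    return [f"{first} {last.upper()}" for first, last in rows]


# The data you generate by clicking through the app yourself.
happy_path = make_db([("Ada", "Lovelace")])
print(display_names(happy_path))  # ['Ada LOVELACE']

# A production-like dataset: one user never filled in a last name.
production_like = make_db([("Ada", "Lovelace"), ("Cher", None)])
try:
    display_names(production_like)
except AttributeError as exc:
    print(f"Breaks on production-like data: {exc}")
```

Nothing in the happy-path run hints at the problem. Only the second dataset exposes the hidden assumption, which is why the dataset you develop against matters as much as the code.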

Trying to burst the bubble

Web-based development environments like GitHub Codespaces can hugely accelerate your development cycle and massively reduce the time taken to get code released to production. GitHub’s own engineering team now gets a Codespaces environment for GitHub itself in 5 seconds.

But these environments are only as good as the data within them. And more often than not, that’s going to start as an entirely empty dataset that only grows through additions on the happy path. This is because the easiest way to bootstrap your Codespace is with an empty database provisioned in the Codespace itself (typically via something like the Dockerfile that defines your Codespace).

To burst the bubble you need to bring in realistic data. In the past, that was easier, as you might have had production-like datasets on your local network to connect to.

When development suddenly shifts to the cloud, how do we get realistic datasets into those environments?

Putting the data in your Docker image is a bad idea, as that will dramatically increase the time taken to both push and pull that image (diminishing a lot of the benefits of “instant” startup of these environments).

Mounting a volume is traditionally the best approach but… how on earth do we mount a data volume into a Codespace? And how do we give that data to GitHub?

We could provision a cloud-hosted database instance somewhere (something like Amazon RDS) and populate that with production-like data. But do you then share that instance with every colleague who is developing in their own Codespace? What if someone deletes some rows, or changes the schema in an incompatible way? Now every developer’s environment is broken.

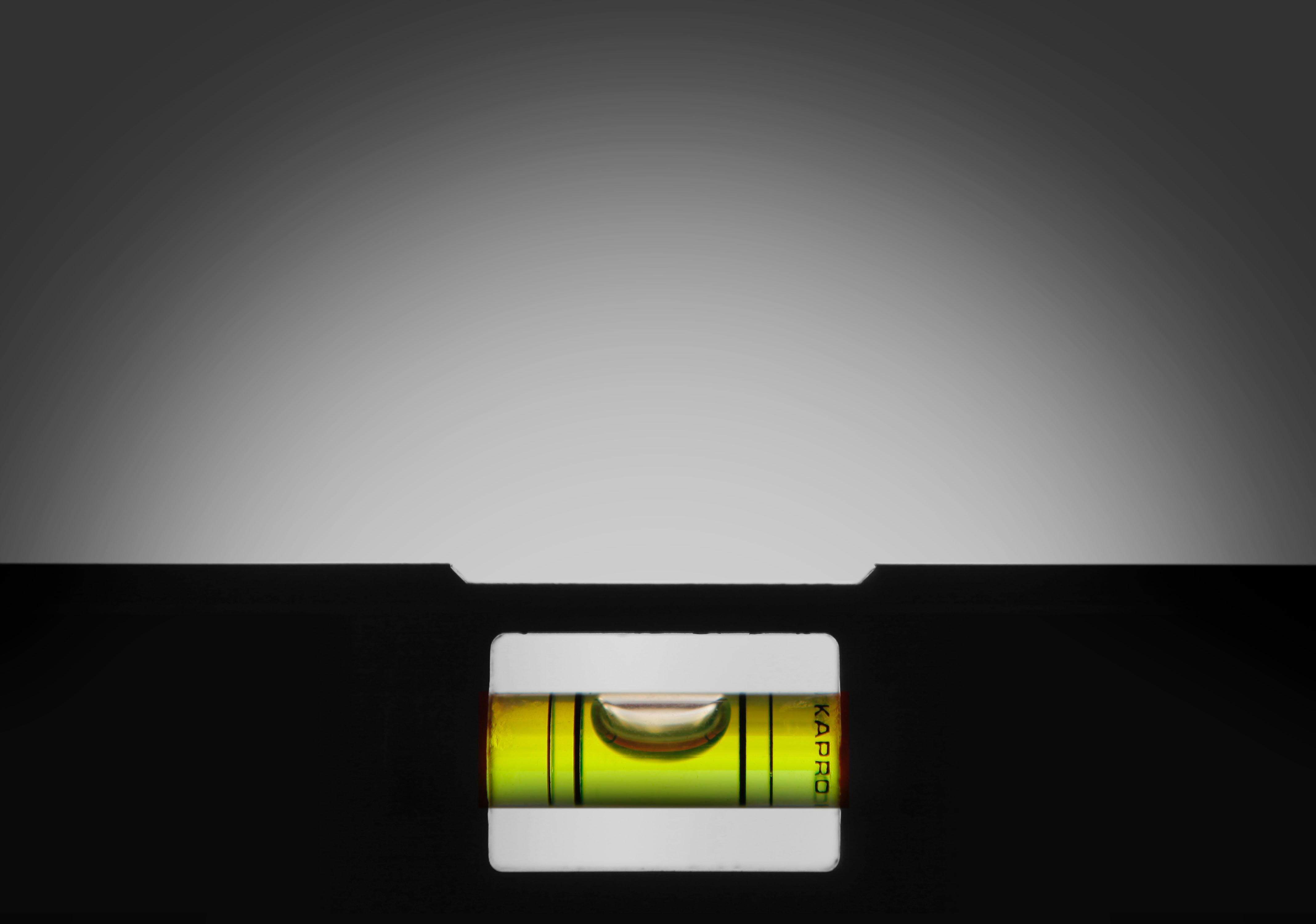
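The alternative to a shared instance is giving each developer a private copy of the same seed data. The sketch below illustrates that isolation with Python’s built-in `sqlite3` module. It is only an analogy under simplifying assumptions: the `orders` table and the `alice` and `bob` connections are hypothetical, and copying a tiny in-memory SQLite database says nothing about how to do this quickly for a large production-sized database.

```python
import sqlite3


def seed_database():
    """Create a small stand-in for a production-like seed dataset."""
    seed = sqlite3.connect(":memory:")
    seed.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
    seed.executemany(
        "INSERT INTO orders (status) VALUES (?)",
        [("paid",), ("refunded",), ("pending",)],
    )
    seed.commit()
    return seed


def private_copy(seed):
    """Give one developer an independent copy of the seed data."""
    copy = sqlite3.connect(":memory:")
    seed.backup(copy)  # copies the whole database into the new connection
    return copy


def count_orders(conn):
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


seed = seed_database()
alice = private_copy(seed)
bob = private_copy(seed)

# Alice wipes the table while experimenting in her own environment.
alice.execute("DELETE FROM orders")
alice.commit()

print(count_orders(alice), count_orders(bob))  # 0 3
```

Because each developer works on a separate copy, Alice’s destructive change never reaches Bob. With a single shared instance, the same `DELETE` would have emptied the table for everyone.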

Levelling the playing field

Solutions like GitHub Codespaces are revolutionising development. But they’ve left the database and stateful services behind.

New problems like this deserve new solutions that are purpose-built with ephemeral environments in mind.

Spawn is one solution to this problem.

Spawn lets you create “data containers”: production-like copies of your databases that can be provisioned instantly regardless of their size (check out this video to see us provision a 300GB+ SQL Server database in under 30 seconds).

You can treat Spawn data containers just like you treat GitHub Codespaces: temporary, preconfigured environments (in Spawn’s case, preloaded with production-like data) that are provisioned instantly to accelerate development.

Spawn fits perfectly with GitHub Codespaces, and we’ve written about how to set up your Codespace with Spawn here.

If you want realistic datasets provisioned instantly in your GitHub Codespaces, with the ability to save, reset, and load arbitrary database save points, then check out Spawn today. It’s free to get started right now.